
Far More Research Into Making Neopoints Than Anyone Needs to Know

The new management of Neopets is trying to start a Neopets Renaissance. They’ve brought back Flash games, thanks to the Rust-based Flash emulator Ruffle. New content is coming out, with an acknowledgement that the player base is mostly nostalgic adults rather than new kids. Although the site aims to maintain its kid-friendly exterior, the inside has changed. These days, the typical Neopets user is a woman in her mid-20s who’s more likely to be LGBT than straight. (See this unofficial demographics survey.) The most recent Faerie Festival was incredibly queer coded, to the point I’d be shocked if it weren’t intended.

I have slightly mixed feelings about all the changes. I like Neopets as a time capsule of the early 2000s Internet, and every change erodes that image. Still, expecting the site to stay the same forever was never a realistic assumption to have.

It’s too early to tell if the Neopets Renaissance is real, but a decent number of people are coming back, many with the same question: how do I afford anything?

Buddy do I have the post for you!

You know how much time I have spent studying how to squeeze Neopoints water out of the Neopets stone? WAY TOO MUCH TIME. Like really I’ve spent too much. I should stop.

Part of your duty to humanity is that if you spend a bunch of time learning something, you should teach what you’ve learned so that other people don’t have to go through the same struggle. Yes, even if it’s about Neopets.

Most mega-rich Neopians made money from playing the item market. Figure out the cost of items, buy low sell high, read trends based on upcoming site events, and so forth. When done properly, this makes you more Neopoints than anything else. It’s similar to real-world finance, where there can be very short feedback loops between having the right idea and executing on that idea for profit.

Also like real finance, doing so requires a baseline level of dedication and activity to keep up-to-date on item prices, haggle for good deals, and get a sense for when a price spike is real or when it’s someone trying to manipulate the market. There is no regulation, with all the upsides and downsides that implies.

This post is not about playing the market, because any such advice would be very ephemeral, and also,

Instead, this is about ways to extract value from the built-in site features of Neopets. We are going to do honest work and fail to ever reach the market-moving elite class and we are going to be fine with that. Everything here is doable without direct interaction with any other Neopets user, aside from putting items in your shop to turn them into pure Neopoints.

I’ve tried to limit this to just things that I think are worth your time. There are tons of site actions that give you free stuff, except the stuff is all junk.

Daily Quests

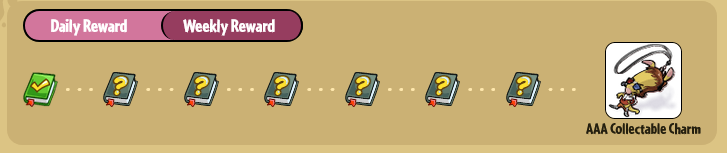

These are super new, just a few weeks old, and are the reason I started writing this post at all.

Each day, you get five daily quests. These are small, tiny tasks, like “play a game” or “feed a pet”. Each quest gives a reward, either Neopoints or an item from the daily quest pool. Doing all 5 dailies gives a 20k NP bonus. I’d say you can expect to get about 25k NP a day, depending on how many NP rewards you get.

The weekly reward is the thing that’s special. If you do all daily quests 7 days in a row, you get a weekly reward, and the weekly rewards are insane. The prices are still adjusting rapidly, but when they first launched, my weekly reward was a book valued at 40 million NP. By the time I got it, the price had dropped like a rock, and I sold it for 450k NP. That book now sits at 200k NP.

The prices of weekly rewards will likely keep dropping, and my guess is that the weekly prize will end up around 150k NP on average. If we assume the daily quests give 25k NP per day, and do them every day for a week, we get an average of (25,000 + 150,000/7) = 46,400 NP/day.

Aside: Item Inflation

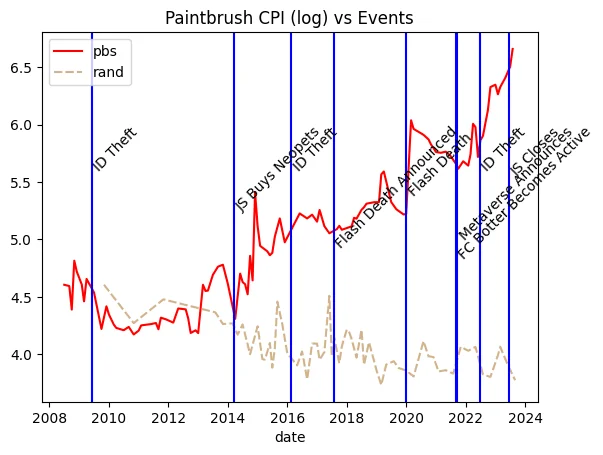

For various reasons, money doesn’t leave the Neopets ecosystem as fast as it should, and some items are rarer than they should be. It’s not a total disaster, but it is bad. There are some nice plots made by u/UniquePaleontologist on Reddit charting item prices going back to 2008, showing trends over 15 years of data! To quote the post,

my background is in biology and data science not economics but I do love my broken html pet simulator

This chart is inspired by the consumer price index, and plots paintbrush prices over time. Paintbrush prices are the red curve, and a random basket of 1000 items is the gray curve. This is in log scale, so increasing the y-axis by 1 means multiplying the price by 2.7x.

We see that random items have trended down over time, but paintbrushes have gone crazy. Looking from 2014 to 2023, paintbrush prices have increased by around 7.4x. This is almost a 25% year-over-year increase. A similar trend appears in stamps and other rare collectibles.
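As a quick check on the two numbers above, here is a minimal sketch of the arithmetic. It assumes the chart uses a natural-log axis and that growth compounds at a constant yearly rate; the function and variable names are mine, not from the Reddit post.

```python
import math

def annual_growth(total_multiplier: float, years: float) -> float:
    """Constant yearly growth rate that compounds to total_multiplier over `years`."""
    return total_multiplier ** (1 / years) - 1

# One unit on a natural-log axis corresponds to a factor of e.
log_unit_factor = math.exp(1)

# Paintbrush prices: roughly 7.4x between 2014 and 2023 (figures from the text).
paintbrush_rate = annual_growth(7.4, 2023 - 2014)

print(f"one log unit = {log_unit_factor:.2f}x")
print(f"paintbrush growth = {paintbrush_rate:.1%} per year")
```

The result lands just under 25% per year, which is where the "almost 25%" figure comes from.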

The common interpretation of the weekly quests is that TNT is directly fighting item inflation, by picking rare items and drastically increasing their supply. You could interpret this as TNT listening to their userbase and making items more accessible, or you could view it as TNT desperately trying to get users interested in playing again. The two are not mutually exclusive!

My interpretation is closer to the latter. The way weekly quests are implemented feels straight out of a gacha game. You have to play every day, you do minor tasks that each feel like they don’t take time but add up to real time in total, and the quests direct you to interact with parts of the site that could either keep you around (Flash games) or encourage you to spend money (pet customization). I’ve already been tricked into playing a Flash game to clear a daily quest, then playing it again just for a high score.

Still, if they are going to pander to me with good items, I will take the good items. I never said these gacha-style techniques didn’t work.

Just six more days…

Food Club

We now move to the exact opposite of Daily Quests. Daily Quests were created incredibly recently, with a partial goal of fighting item inflation, and intelligently use techniques from a mobile gaming playbook.

Food Club is a dumb system, made 20 years ago without any conception towards the long-term health of the site, and is a major reason the economy has so much inflation.

Think of Food Club as like betting on horse racing. No, scratch that, it’s literally betting on horse racing. Except the horses are pirates and the race is competitive eating. There are 5 arenas, each with 4 pirates that compete for who can eat the most food. You can make 10 bets a day, and earn Neopoints if your bets are correct.

There is some hidden formula defining win rate that I’m sure someone has derived from data, but, in general, Neopets displays the odds for each pirate and you can assume the odds for a pirate match its win probability. A 4:1 pirate will have a win rate of about 25%, and betting on them is zero expected value.

(Normally, gambling odds account for returning your wager, and 5:1 odds pays out 5+1=6 on a win and 0 on a loss, corresponding to a 1/6 win probability. Food Club never returns your wager, so 4:1 pays out 4 and means a 1/4 win probability.)

Neopets always rounds pirate odds to the nearest integer, and never lets them go lower than 2:1 or higher than 13:1. We can use this to our advantage.

Suppose we have an arena like this:

Pirate 1 - 2:1

Pirate 2 - 6:1

Pirate 3 - 13:1

Pirate 4 - 13:1

This is a real arena from today’s Food Club, at time of writing. Let’s figure out the 2:1 pirate’s win probability. We know the 6:1 pirate wins around 1/6 of the time, and the two 13:1 pirates win around 1/13 of the time. (Possibly less, but let’s be generous.) The 2:1 pirate wins the rest of the time, so their win probability should be around

\[1 - \frac{1}{6} - \frac{1}{13} - \frac{1}{13} \approx 67.9\%\]

If Neopets didn’t round, the true payout for this pirate should be around 1.47:1. But thanks to rounding, the payout is 2:1 and betting on this pirate is positive expected value. We’d get an expected profit of about 35.9%.

Food Club strategy is based on identifying the positive expected value pirates and exploiting them as much as possible. Finding them is easy (you do the math above for each arena), but deciding how risky you want to play it is where strategy comes in. If you don’t want to learn strategy, there are plenty of “Food Club influencers” who publicly post their bets each day on Reddit or Discord, and you can just copy one of them. Generally you can expect an average profit of 70%-90% of what you bet each day, although you should only start doing Food Club when you have enough of a bankroll to absorb losses. Around 50x your max bet size should be good enough (covers up to 5 straight days of total busts).
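The per-arena math can be sketched in a few lines. This is only an illustration under the same generous assumption as above (every non-favourite pirate wins with probability 1/odds); `favourite_edge` is a made-up helper name, and real Food Club tools model win rates more carefully.

```python
def favourite_edge(displayed_odds: list[int]) -> tuple[float, float]:
    """Return (win probability, expected profit per NP bet) for the lowest-odds pirate.

    Assumes each other pirate wins with probability 1/odds and that a winning
    bet at N:1 pays N times the wager, with the wager itself never returned.
    """
    odds = sorted(displayed_odds)
    favourite, others = odds[0], odds[1:]
    p_win = 1 - sum(1 / o for o in others)
    return p_win, favourite * p_win - 1

# The example arena: 2:1, 6:1, 13:1, 13:1.
p, edge = favourite_edge([2, 6, 13, 13])
print(f"win probability {p:.1%}, expected profit {edge:.1%}")
```

A positive second value means the favourite is worth betting on; for the example arena it comes out to a little under 36%.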

The maximum bet size you can make is 50 plus 2 times your account age, in days. Let’s suppose you successfully recover your account that’s 15 years old. You’d be able to bet 11,000 NP per bet. With 10 bets per day and an 80% expected profit, that’s (10 * 11,000 * 0.8) = 88,000 NP/day.
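To plug in your own account age, the formula above is easy to wrap in a helper. A small sketch: the 80% average return is the rough estimate from this post rather than a guaranteed figure, and the function names are hypothetical.

```python
def max_bet(account_age_days: int) -> int:
    """Maximum Food Club bet: 50 NP plus 2 NP per day of account age."""
    return 50 + 2 * account_age_days

def expected_daily_profit(account_age_days: int,
                          bets_per_day: int = 10,
                          avg_return: float = 0.8) -> float:
    """Expected NP/day if every bet is max size and earns avg_return on average."""
    return bets_per_day * max_bet(account_age_days) * avg_return

age_days = 15 * 365  # a 15-year-old account, ignoring leap days
print(f"max bet: {max_bet(age_days):,} NP")
print(f"expected profit: {expected_daily_profit(age_days):,.0f} NP/day")
```

A brand new account gets `max_bet(0)`, or 50 NP per bet, which shows how wide the gap is between recovered and fresh accounts.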

This is a lot. Who knows why TNT decided to make max bet size dependent on account age, but it creates a real divide between users who recover their old account and users who give up and start fresh. It’s also incredibly easy to automate. u/neo_truths is a grey hat hacker who has access to Neopets source code and database logs, and occasionally discloses deep dives into Neopets data on Reddit. They’ve revealed that based on request logs, it’s very likely there is a Food Club botnet. Thanks to the (many) Neopets data breaches, and lax Neopets security standards, there are a lot of vulnerable accounts out there. The botter has presumably broken into many old, abandoned accounts, taken everything they had, and converted them into Food Club players.

- They have stolen over 40k accounts already (started late 2021) and keep stealing hundreds every week

- Sum of historic food club profit having profit > 100k: 224b with 14.5k accounts

- Sum of bank balance having balance > 100k: 51b with 14.5k accounts

- Sum of on hand balance having balance > 100k: 2b with 1.5k accounts

- Sum of shop balance having balance > 100k: 4b with 125 accounts

From here

This botnet is creating literally billions of NP each day, selling it for real money on black market sites, and pumping NP into the economy. It’s pretty likely this is why item inflation has taken off. It’s a real problem, but I’m not sure TNT has an easy way out. Food Club is the recommended NP making tool for anyone who comes back to the site. Taking away free money is hard, and it’s a site feature that’s stayed the same for so long that it has its own inertia. They might revamp Food Club, but for now, go bet on those pirates.

Trudy’s Surprise

This is a daily they added in 2015, and just gives away more Neopoints.

Each day, you spin the slot machine and get NP based on how many icons you match. Matching 0 icons vs all 4 icons is only a difference of 2k to 3k NP - the main trick is that the base Neopoints value quickly rises with the length of your streak, reaching 18.5k NP/day starting at day 10 and a guaranteed payout of 100k on day 25. The streak then resets. Trudy’s Surprise also gives items for 7 day streaks, but these items are usually junk and not worth anything.

The payout table is listed on JellyNeo. We’ll assume that you make 0 matches every day and get to day 25 of the streak. This will include one Bad Luck Bonus, an extra 2.5k paid the first time you get 0 matches during a streak.

Averaging over the payout table, we get to 17,915 NP/day. Most of the money is made between Day 10 and Day 25.

Battledome

Ah, the Battledome. You play long enough, you end up with one high-end outlet. For me that was the Battledome. Top tier items can literally extend up to over a billion Neopoints.

The Battledome went through a revamp in 2013. Diehard fans usually don’t like this revamp. It removed HP increase from 1-player (which destroyed most single-player competition), removed complexity that the 2-player community liked, and introduced Faerie abilities so broken that the majority are banned in what little exists of the PvP scene.

The one good thing it brought was fight rewards. When you win against CPU opponents, you earn a small amount of Neopoints and an item drop. The item drops can include codestones, which you can use to train your pet. Or, you could just sell them directly. You get 15 item drops per day.

For a long time, the exact mechanics of drop rates were not fully known, aside from users quickly finding that the Koi Warrior dropped codestones way more often. Over time, people found that there are arena-wide drops, which come from every challenger in that arena, and challenger-specific drops, which depend on which challenger you fight.

There are a few sources of Battledome drop data:

- A list of Battledome prizes run by JellyNeo’s Battlepedia, which lists all arena-specific and challenger-specific prizes, but without their drop rates.

- A crowdsourced dataset of Battledome drops summarized here. This gives exact rates for codestone drops, but only aggregates the data into “codestone” versus “not-codestone”. Through this, we find that the arena of the opponent is the most important factor for codestone drops, and there’s little variation in drop rate for different challengers in that arena. The Koi Warrior is in the Dome of the Deep, the arena with the highest codestone drop rate.

- Much later, u/neo_truths posted a leak on the drop rates and drop algorithm. This gives exact rates for the arena-wide drops, but has no data on challenger specific drops.

First let’s check the validity of this leak. Using data from that post, I coded a Battledome drop simulator, and ran it to sample 100k items from each arena. You can download the Python script here. Let’s compare this to the crowdsourced codestone drop rates.

| Arena | Codestones (crowdsourced) | Codestones (simulator) |
|---|---|---|
| Frost Arena | 17% | 19.9% |
| Cosmic Dome | 15% | 17.1% |
| Pango Palladium | 14% | 16.6% |
| Rattling Cauldron | 24% | 25.0% |
| Central Arena | 11% | 12.0% |
| Neocola Centre | 18% | 20.3% |
| Dome of the Deep | 30% | 34.1% |
| Ugga Dome | 14% | 15.3% |

Overall the leak looks pretty legitimate! The simulator consistently overestimates the rate of codestone drops, but this makes sense because it pretends challenger-specific drops don’t exist. Every time you get a challenger-specific drop, you miss out on an arena-wide drop, and codestones only appear in the arena-wide item pool.

The reason I care about verifying this leak is that the Battledome drops more than just codestones, and I want a more exact estimate of expected value from Battledoming. Before going forward, we’ll need to make some adjustments. On average, the true crowdsourced codestone drop rate is around 89% of the simulator’s drop rate. So for upcoming analysis, I’ll multiply all drop rates from my simulator by 89%. (One way to view this is that it assumes challenger drops are 11% of all item drops, and all such drops are worth 0 NP.) To further simplify things, I’ll only count a few major items.

- Codestones dropped by every arena. Training school currency.

- Dubloon coins dropped in Dome of the Deep. Training school currency.

- Armoured Neggs dropped in Neocola Centre. Can be fed to a Neopet for +1 Defense.

- Neocola tokens dropped in Neocola Centre and the Cosmic Dome. Can be gambled at the Neocola Machine, which has a chance of giving a Transmogrification Potion.

- Nerkmids from the Neocola Centre and Cosmic Dome. Can be gambled at the Alien Aisha Vending Machine, which has a chance of giving a Paint Brush.

- Cooling Ointments from the Frost Arena. Can cure any disease from your Neopet.
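As a sanity check on the 89% adjustment, the sketch below averages the ratio of crowdsourced to simulated codestone rates across the eight arenas. Averaging per-arena ratios is my assumption about how the figure was derived; other reasonable weightings give a similar answer.

```python
# (crowdsourced %, simulator %) codestone drop rates per arena, from the comparison table.
codestone_rates = {
    "Frost Arena": (17, 19.9),
    "Cosmic Dome": (15, 17.1),
    "Pango Palladium": (14, 16.6),
    "Rattling Cauldron": (24, 25.0),
    "Central Arena": (11, 12.0),
    "Neocola Centre": (18, 20.3),
    "Dome of the Deep": (30, 34.1),
    "Ugga Dome": (14, 15.3),
}

# Ratio of observed rate to simulated rate, one value per arena.
ratios = [crowd / sim for crowd, sim in codestone_rates.values()]
adjustment = sum(ratios) / len(ratios)

print(f"mean crowdsourced/simulator ratio: {adjustment:.2f}")
```

The ratio ranges from about 0.84 (Pango Palladium) to 0.96 (Rattling Cauldron), so a single 0.89 multiplier is a simplification rather than an exact correction.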

Using JellyNeo’s estimated item prices at time of writing, we can find which arena gives the most profit, assuming you play until you get 15 items.

| Arena | Expected Profit |
|---|---|
| Central Arena | 26,813 NP/day |
| Ugga Dome | 27,349 NP/day |
| Frost Arena | 35,428 NP/day |
| Pango Palladium | 38,904 NP/day |
| Rattling Cauldron | 48,521 NP/day |
| Neocola Centre | 57,408 NP/day |
| Dome of the Deep | 69,046 NP/day |
| Cosmic Dome | 135,557 NP/day |

I should warn you that getting to 15 items a day requires a lot of clicks, but the profit available is very real. The Cosmic Dome is clearly best, but you can only fight those challengers if you have Neopets Premium. Assuming you don’t, you’ll want to fight the Koi Warrior in the Dome of the Deep instead.

A default untrained pet won’t be able to defeat the Koi Warrior. If you are just getting started, my recommendation is to start with the S750 Kreludan Defender Robot. It’s in the Neocola Centre, and at just 14 HP it’s an easy fight that still gives 57.4k NP/day. Then, you can train your way up to fighting the Koi Warrior if you’re so inclined. There are a bunch of guides for how to train and what weapons are good to use. I recommend the weapon sets hosted by the Battlepedia, which are already updated to account for daily quests making some items much cheaper.

One last thing. All analysis earlier assumes challenger-specific items are worthless. There are two major exceptions. First is the Giant Space Fungus. The Giant Space Fungus is in the Cosmic Dome, and when fought on Hard it will sometimes drop Bubbling Fungus, which can be consumed to increase Strength. They sell for 136k NP each. The crowdsourced post from earlier found that Bubbling Fungus was 1% of the item drops. Fighting it gives 0.15 Bubbling Fungus per day, or 20,400 NP/day extra.

The second is the Snowager. It’s in the Frost Arena, and can drop Frozen Neggs. These can be traded directly for Negg points, meaning they can get traded for Sneggs which boost HP. Of all stat boosters, HP increasers are the most expensive, since it’s the only stat that can be increased without limit, and some high-end users like to compete on having the strongest pet. Each Frozen Negg sells for 450k NP at time of writing.

Unfortunately there is not much data around on Frozen Negg drop rates. The best I found is this Reddit post where they fought the Snowager every day between March 30th and June 1st. They got 24 Frozen Neggs in that time, which is an average of 0.375 Frozen Neggs per day. Assuming that rate holds, the Snowager gives an extra 168,750 NP/day. On top of the other Frost Arena items, you’re looking at 200k NP/day!
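Both challenger-specific estimates are simple rate-times-price calculations, sketched below. The year in the date arithmetic is an assumption (the Reddit post's year isn't given here, and any year gives the same day count), and the prices are the point-in-time values quoted above.

```python
from datetime import date

DROPS_PER_DAY = 15  # daily item drop limit in the Battledome

def extra_np_per_day(items_per_day: float, price: int) -> float:
    """Extra NP/day from an item that drops items_per_day times on average."""
    return items_per_day * price

# Giant Space Fungus (Hard): Bubbling Fungus is about 1% of drops, 136k NP each.
fungus_extra = extra_np_per_day(DROPS_PER_DAY * 0.01, 136_000)

# Snowager: 24 Frozen Neggs between March 30th and June 1st, inclusive.
# The year 2023 is assumed purely for the calendar arithmetic.
days_fought = (date(2023, 6, 1) - date(2023, 3, 30)).days + 1
negg_rate = 24 / days_fought
snowager_extra = extra_np_per_day(negg_rate, 450_000)

# Add the arena-wide Frost Arena estimate from the profit table.
snowager_total = 35_428 + snowager_extra

print(f"Fungus extra:   {fungus_extra:,.0f} NP/day")
print(f"Snowager extra: {snowager_extra:,.0f} NP/day over {days_fought} days of data")
print(f"Snowager total: {snowager_total:,.0f} NP/day")
```

Keep in mind the Frozen Negg rate comes from a single player's 24 drops, so the true rate could be noticeably higher or lower.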

The one snag is that both of these enemies are among the hardest challengers in the game. The Giant Space Fungus on Hard has 632 HP. The Snowager on Easy has 650 HP. If you are new to pet training, it could literally take you a year and millions of NP to get your pet strong enough to beat the Snowager. If your only goal is to earn money, it will pay off eventually, but you’ll need to be patient. If you’re willing to start that journey, I recommend following the route in the Battlepedia guide (train all stats evenly up to level 100 / strength 200 / defense 200, then train only level to 250 to unlock the Secret Ninja Training School, then catch up the other stats). It’ll save you both time and money.

In summary,

- The Battledome can give you a lot of Neopoints per day if you fight until you get 15 items and sell them each day.

- The profit order is Snowager > Giant Space Fungus (Hard) > any Cosmic Dome enemy > any Dome of the Deep enemy > S750 Kreludan Defender Robot

- Most players who don’t have premium will stop at Dome of the Deep and reach 69k NP/day, but if you are willing to commit to training up to Snowager levels you can earn 200k NP/day instead.

Aside: The Eo Codestone Conspiracy

Eo Codestones have consistently been more expensive than other codestones. When asked why, the very common reply is that Eo Codestones drop more rarely.

This has always felt off to me. An Eo Codestone sells for 33,500 NP. A Main Codestone sells for 3,000 NP. You’re telling me an Eo Codestone is over 10 times rarer than a Main Codestone? That seems crazy.

I ran my Battledome sim, and in both the Cosmic Dome and Dome of the Deep, I found that Eo Codestones dropped at a similar rate to every other tan codestone. I then checked my Safety Deposit Box, and saw the same thing - I have about the same number of every kind of tan codestone. And I have a few hundred of each, so I’m pretty sure the sample size is big enough.

The “drops more rarely” argument seems bogus. I have three remaining theories.

- Codestones given out through other means (i.e. random events) are heavily biased towards Eo Codestones. This seems unlikely to me. Most codestones should come from Battledome events these days, so even if random events were biased, they shouldn’t affect the distribution by enough to explain a 10x price difference.

- The Mystery Island Training School asks for Eo Codestones more frequently than other ones. This could explain the difference - similar supply, higher demand. I don’t know of any stats for this, but this also seems unlikely to me. At most I could see a codestone getting asked for 2x as often as another one, not 10x as often.

- The Eo Codestone price is heavily manipulated to keep an artificially high price. This seems like the most likely theory to me.

Unfortunately, knowing the price is likely manipulated doesn’t mean I can do anything about it. The free market’s a scam, but it’s the only game in town.

(July 2024 EDIT: I’ve since learned that although Cosmic Dome and Dome of the Deep have even drop rates, the Frost Arena does not. Either intentionally or unintentionally, the Frost Arena does not drop any Eo Codestones, dropping 2x as many Main and Mag codestones instead. I just never tested this. Looking at JellyNeo prices, those are the two cheapest tan and red codestones respectively. So, there doesn’t have to be a market manipulation conspiracy. Instead, the high price of Eos can be explained if most active players fight the Snowager instead of easier enemies. With the change to allow infinite rerolling of Training School quests, the prices of codestones should flatten, and none of this will matter.)

Wishing Well

The Wishing Well is a place where you toss in Neopoints to make wishes. If you’re lucky, your wish will be granted!

Each wish costs 21 NP, and you can make up to 14 wishes a day (7 in the morning and 7 at night). You may wish for any item rarity 89 and below.

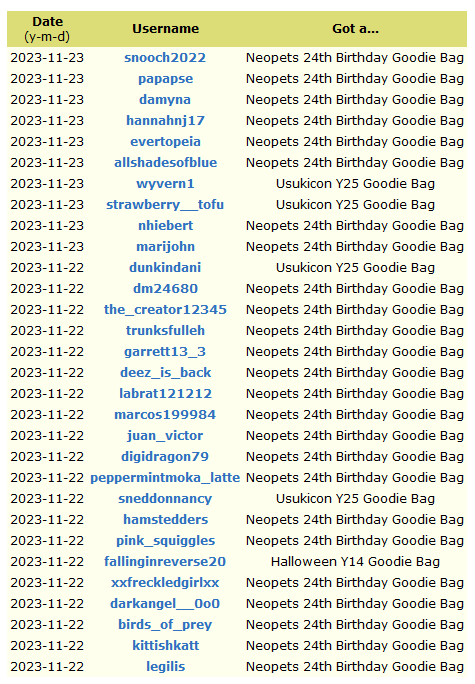

What makes the Wishing Well profitable is that the most expensive rarity 89 or below item is usually way, way more expensive than the wishing cost. When I started doing the Wishing Well, the price of such items was around 300k, but now it’s regularly over 1 million. You can usually find what to wish for by seeing what’s most common in the winner list from yesterday.

As for why it’s so high? The Wishing Well only gives out 20 items a day, and this isn’t enough to make a dent in demand, especially when Neopets keeps releasing new items. Case in point - for Neopets’s 24th birthday, shops started stocking a Neopets 24th Birthday Goodie Bag. It was pretty hard to obtain one thanks to restock botters, but people figured out they were an r79 item and just started wishing for them.

People are asking for 7 million NP on the Trading Post for these bags. I don’t think that will last. For the purposes of estimating value, let’s assume a Wishing Well item is worth 1 million NP. I’d say I win an item from the Wishing Well about 3 times a year. Then the expected earnings are 3 million NP per year, or 8,219 NP/day. It costs 294 NP to make the wishes, so this is 7,925 NP/day.
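Since both the item value and the win frequency are rough personal estimates, it can help to make them parameters. A minimal sketch, with a hypothetical function name and the defaults taken from the numbers in this section:

```python
def wishing_well_daily(item_value: float,
                       wins_per_year: float,
                       wish_cost: int = 21,
                       wishes_per_day: int = 14) -> float:
    """Expected net NP/day from making every available wish."""
    gross = item_value * wins_per_year / 365
    cost = wish_cost * wishes_per_day
    return gross - cost

# Estimates from the text: 1 million NP per granted wish, about 3 wins a year.
baseline = wishing_well_daily(1_000_000, 3)
# A more pessimistic guess, e.g. if more players start wishing.
pessimistic = wishing_well_daily(1_000_000, 1)

print(f"baseline:    {baseline:,.0f} NP/day")
print(f"pessimistic: {pessimistic:,.0f} NP/day")
```

Even at one win a year the wishes pay for themselves many times over, because the daily cost is only 294 NP.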

The more people who make wishes, the less frequently anyone’s wishes will be granted. I did consider not mentioning the Wishing Well to preserve profit for myself, but I figure the effect isn’t that big, and it’s not that much of a secret anyways.

Bank Interest

In real life, the interest rate you get in a savings account is driven by the Treasury’s interest rate, which is based on a bunch of complicated factors over what they want the economy to look like.

In Neopets it’s driven by how many Neopoints you deposit. The more you store, the more interest you get. At the top-most bracket (10 million Neopoints), you earn 12.5% interest per year. You may be thinking “doesn’t this promote rich-get-richer?” and yep you’re entirely right. You may also be thinking “isn’t 12.5% interest per year a lot?” and yep, you’re also right.

This is never going to be a big part of your daily income unless you have a ton of money already, and if you already have a ton of money I don’t know why you’re reading this guide. I mention it because it’s the floor for any money making method that doesn’t convert into cash on hand. Such as…

The Stock Market

Ahh, the stock market. According to legend, stock prices used to be driven by user behavior. Then some users coordinated a stock pump, and it got changed to be entirely random. This didn’t stop people from posting “to the mooooon” on the message boards, especially during the Gamestop craze of 2021.

Each day, you can buy up to 1,000 shares of stock. You’re only allowed to buy stock that’s at least 15 NP/share (or 10 NP/share if you have the Battleground boon - more on that later). You can sell as much stock as you want, paying a 20 NP commission per transaction. (In practice this commission is basically zero and I’ll be treating it as such.)

If stock motion is entirely random, how can you make money? You can think of stock prices like a random walk. Sometimes they drift up, sometimes they drift down, but you only realize gains or losses at the time you sell. So you simply hold all the unlucky stocks that go down, and sell the lucky stocks that go up.

The common advice is to set a sell threshold, and sell only when the stock crosses that price. The higher your threshold, the more money you’ll make, but the longer you’ll have to wait. Conventional wisdom is to sell at 60 NP/share. But how accurate is this wisdom? A lot of analysis has been done by users over the years, including:

- A histogram of price movements from JellyNeo.

- A neostocks.info site that lets you check historical stock prices

- Corresponding analysis of neostocks data by u/not-the-artist on Reddit.

This data all suggests the conventional wisdom of selling at 60 NP/share is correct, since price movement is dependent on current price, and the 61-100 NP range is where average price movement changes from net 0 to slightly negative. That threshold is the point where you start losing money due to missing out on bank interest.

The cause of this was eventually revealed by u/neo_truths’s leak of the Stock Market pricing algorithm.

Set max move to current price / 20

Set max move to current price / 50 if current price > 100

Set max move to current price / 200 if current price > 500

Set max move to current price / 400 if current price > 1000

Round up max move

Set variation to random between 1 and max move * 2 - 1. Randomly add +1 to variation with 1/20 chance. Randomly subtract 1 with 1/20 chance.

If current price >= 10 and current price / opening price > 1.15 [subtract] max move / 4 rounded down from variation

If current price >= 10 and current price / opening price > 1.3 [subtract] max move / 4 rounded down from variation

Set points to variation - max move rounded down

Set p to min(current price * 10, 100)

If points + current price > 5, change stock price to current price + points with p% chance

Some re-analysis by u/not-the-artist is here, confirming this leak is consistent with what was seen before. The TL;DR for why 60 NP is the magic number comes from these two lines.

If current price >= 10 and current price / opening price > 1.15 [subtract] max move / 4 rounded down

If current price >= 10 and current price / opening price > 1.3 [subtract] max move / 4 rounded down

These are the only sections of the pricing algorithm that are negative on average. Thanks to rounding, these conditions only fire when max move is at least 4, and if we look above, this only starts happening in the 61-100 NP range.

Using a 60 NP sell threshold will lead to an average holding time of 399 days. When bank interest is accounted for, 1,000 shares of 15 NP stock is worth around 29,550 NP. (It is lower than 60k NP because you miss on 399 days of bank interest - full math is done here for the curious). This gives a profit of 14,550 NP/day, although you will have to wait over a year per buy to convert it back to cash-on-hand.
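For anyone who wants to poke at the leaked rules, here is one possible Python reading of them. It is a sketch of my interpretation, not the actual site code: I assume "random between" means a uniform integer with both ends included, that later max move rules override earlier ones, that the two 1/20 adjustments are rolled independently, and that prices are integers of at least 1.

```python
import math
import random

def max_move(price: int) -> int:
    """Max move for a price; later thresholds override earlier ones."""
    divisor = 20
    if price > 100:
        divisor = 50
    if price > 500:
        divisor = 200
    if price > 1000:
        divisor = 400
    return math.ceil(price / divisor)

def step(price: int, opening: int, rng: random.Random) -> int:
    """One price update under this reading of the leaked rules."""
    mm = max_move(price)
    variation = rng.randint(1, mm * 2 - 1)
    if rng.random() < 1 / 20:
        variation += 1
    if rng.random() < 1 / 20:
        variation -= 1
    # The two downward adjustments; each subtracts nothing while mm < 4.
    if price >= 10 and price / opening > 1.15:
        variation -= mm // 4
    if price >= 10 and price / opening > 1.3:
        variation -= mm // 4
    points = variation - mm
    p = min(price * 10, 100)
    if points + price > 5 and rng.random() * 100 < p:
        return price + points
    return price

# Lowest price at which the downward adjustments can subtract anything.
first_penalised = next(p for p in range(1, 2000) if max_move(p) // 4 >= 1)
print(first_penalised)  # 61
```

Calling `step` repeatedly with a seeded `random.Random` gives a rough simulator for experimenting with sell thresholds, though I have not used it to reproduce the 399-day holding time.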

Look at it this way - you’re making your money work for you. Also there’s a free avatar for getting to 1 million NP in the stock market, which you’ll easily hit if you wait until 60 NP to sell. I’ve got about 6 million NP tied up in the market right now.

Coconut Shy

This is one of many gambling minigames themed around ones you’d see in amusement parks. Like amusement parks, most of the games are scams. Coconut Shy is one of the few exceptions.

You can throw 20 balls per day for 100 NP each, earning one of five outcomes.

- A miss (0 NP)

- A small hit (50 NP)

- A strong hit that doesn’t knock over a coconut (300 NP)

- Knock over a coconut (10,000 NP + a random Evil Coconut)

- The coconut explodes (Jackpot! 500,000 NP)

The two outcomes worth money are the last two. The jackpot is obviously good, but the Evil Coconuts are actually worth more. Every Evil Coconut is a stamp, and some Neopets users love collecting stamps. Right now, a given Evil Coconut sells for between 750,000 NP and 1,000,000 NP.

Coconut Shy odds were leaked by u/a_neopian_with_info in this Reddit post. Let’s assume you use the Halloween site theme, which slightly improves your odds. The payout table is

| Payout | Probability |
|---|---|
| 0 NP | 20% |
| 50 NP | 65% |
| 300 NP | 14.9% |
| 10,000 NP + Evil Coconut | 0.099% |
| 500,000 NP | 0.001% |

If we value evil coconuts at 750k NP, this is an expected payout of 834.6 NP per throw. Each throw costs 100 NP, so it’s 734.6 NP profit per throw, and doing 20 throws gives 14,692 NP/day. This has pretty high variance. On average, you’ll only win a worthwhile prize every 50 days.
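The expected value is a weighted sum over the payout table, sketched below. The top two probabilities are taken as 0.099% and 0.001%, which are the values that make the table sum to 100% and reproduce the 834.6 NP figure; the 750k coconut value is the low end of the quoted price range.

```python
EVIL_COCONUT_VALUE = 750_000
THROW_COST = 100
THROWS_PER_DAY = 20

# (payout in NP, probability) for each outcome, Halloween theme odds.
outcomes = [
    (0, 0.20),
    (50, 0.65),
    (300, 0.149),
    (10_000 + EVIL_COCONUT_VALUE, 0.00099),
    (500_000, 0.00001),
]

# Sanity check: the probabilities should cover every outcome exactly once.
total_probability = sum(prob for _, prob in outcomes)

expected_payout = sum(value * prob for value, prob in outcomes)
daily_profit = THROWS_PER_DAY * (expected_payout - THROW_COST)

# Average wait for a coconut or jackpot.
big_win_chance = 0.00099 + 0.00001
days_per_big_win = 1 / (THROWS_PER_DAY * big_win_chance)

print(f"expected payout per throw: {expected_payout:.1f} NP")
print(f"daily profit: {daily_profit:,.0f} NP")
print(f"days per big win: {days_per_big_win:.0f}")
```

Nearly all of the value comes from the Evil Coconut line, so the estimate scales almost directly with whatever coconuts currently sell for.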

If you decide to go Coconut throwing, you’ll need to use the direct link from JellyNeo. The original game used Flash, and it was never converted after the death of Flash, but you can still directly hit the backend URL that the Flash game would have hit.

I am honestly surprised the Evil Coconuts are still so expensive. My guess is that most users don’t know Coconut Shy is net profitable, or the ones that do can’t be bothered to do it.

Faerie Caverns

Spoiler alert: Faerie Caverns are just a more extreme version of Coconut Shy.

Each day, you can pay 400 NP for the right to enter the caverns. You’ll face three “left or right?” choices in the cave, with a 50% chance of either being right. If you guess right all three times, you win a prize!

If you don’t, you get nothing and have to try again tomorrow.

People do this daily because of the Faerie Caverns stamp, which is only available from this daily and sells for around 50 million NP. However, your odds of winning it are very slim. You have a 1 in 8 chance of winning treasure, and according to a u/neo_truths leak, the payouts for doing so are as follows.

| Payout | Probability |
|---|---|
| 500 NP to 2,500 NP | 89.9% |
| 5,000 NP | 4.9% |
| 10,000 NP | 5.1% |
| 25,000 NP + item prize | 0.1% |

Of the item prizes, you have a 10% chance of getting a Faerie Paint Brush, and a 90% chance of winning one of Beautiful Glowing Wings, Patamoose, Faerie Caverns Background, or Faerie Caverns Stamp. You can see in the odds that Faerie Paint Brush is considered the best prize, so it’s funny that every other prize is worth more.

| Prize | Estimated Price |
|---|---|
| Faerie Paint Brush | 1,200,000 NP |
| Beautiful Glowing Wings | 2,000,000 NP |
| Patamoose | 3,000,000 NP |
| Faerie Caverns Background | 2,000,000 NP |
| Faerie Caverns Stamp | 50,000,000 NP |

If you do the expected value math, then doing Faerie Caverns is net profitable. The expected earnings work out to 1,772 NP/day. After accounting for the 400 NP cost, the Faerie Caverns are worth 1,372 NP/day.
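Here is a sketch of that expected value math. Two assumptions are mine: the lowest tier is valued at its 500 NP minimum, and the 90% non-paint-brush chance is split evenly across the other four items. Together they reproduce the 1,772 NP/day figure.

```python
ENTRY_COST = 400
TREASURE_CHANCE = 1 / 8  # three 50/50 guesses in a row

PAINT_BRUSH = 1_200_000
OTHER_ITEMS = {
    "Beautiful Glowing Wings": 2_000_000,
    "Patamoose": 3_000_000,
    "Faerie Caverns Background": 2_000_000,
    "Faerie Caverns Stamp": 50_000_000,
}

# Expected value of an item prize: 10% paint brush, 90% split evenly (assumed).
item_value = 0.10 * PAINT_BRUSH + 0.90 * sum(OTHER_ITEMS.values()) / len(OTHER_ITEMS)

# Expected payout once you reach the treasure; lowest tier counted as 500 NP (assumed).
treasure_value = (
    0.899 * 500
    + 0.049 * 5_000
    + 0.051 * 10_000
    + 0.001 * (25_000 + item_value)
)

daily_earnings = TREASURE_CHANCE * treasure_value
daily_net = daily_earnings - ENTRY_COST

print(f"expected earnings: {daily_earnings:,.0f} NP/day")
print(f"after entry cost:  {daily_net:,.0f} NP/day")
```

Valuing the lowest tier at its 1,500 NP midpoint instead would add a bit over 100 NP/day, which doesn't change the conclusion.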

This really isn’t that much. It’s the same as playing some Flash games, but with more gambling involved. The positive expected value is entirely dependent on winning an item prize, which is a 1 in 1000 chance after passing a 1 in 8 chance of reaching the treasure. Neopets is about 8700 days old. You could have played Faerie Caverns every day for Neopets’s entire existence, and it would not be surprising if you never won an item prize.
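To put a number on "not surprising", here is a quick sketch. It assumes each day is an independent attempt with the 1 in 8 and 1 in 1000 odds quoted above, and uses the rough 8700 day figure from the text.

```python
# Chance of never winning a Faerie Caverns item prize, playing once per day.
P_TREASURE = 1 / 8             # three 50/50 guesses in a row
P_ITEM_GIVEN_TREASURE = 0.001  # the 0.1% "25,000 NP + item prize" row
DAYS = 8700                    # rough age of Neopets in days, per the text

p_item_per_day = P_TREASURE * P_ITEM_GIVEN_TREASURE
p_never = (1 - p_item_per_day) ** DAYS

print(f"Item prize chance per day: 1 in {1 / p_item_per_day:,.0f}")
print(f"Chance of zero item prizes in {DAYS} days: {p_never:.1%}")
```

Under those assumptions, roughly one in three players who never missed a day would still be empty-handed.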

For that reason, I don’t do the Faerie Caverns daily. Still, it is technically worth it if you have higher risk tolerance. 400 NP a day is pretty cheap.

Battleground Boons

The Tyrannian Battleground is an ongoing site-wide event. Every 2 weeks, team signups are open for 1 week, then you fight the 2nd week. If you fought at least 10 battles, and your team wins, everyone on your team can choose a boon that lasts during the next cycle’s signup period (lasts for 1 week).

Some of these boons are important modifiers to previous money-earning methods.

- The Bank Bribery boon increases bank interest by 3%.

- The Cartogriphication boon tells you which direction to go in the Faerie Caverns, making your odds of treasure 100% instead of 12.5%.

- The Cheaper by the Dozen boon lets you buy stocks at 10 NP instead of 15 NP.

In general, these boons are nice but I don’t think they’re worth going for. We can math them out as follows.

- Ignoring compound interest effects, Bank Bribery is worth (3% / 365) * (net worth) per day.

- For Cartogriphication, your expected payout gets multiplied by 8. This makes the Faerie Caverns worth 14,174 NP/day. Subtracting the default 1,772 NP/day value, we get an increase of 12,402 NP/day.

- The Cheaper by the Dozen boon will save 5,000 NP/day when buying stock.

We see that Cartogriphication is the best boon if you are risky, Cheaper by the Dozen is the best boon if you aren’t, and Bank Bribery is the best boon if you are rich. For the last one, you’ll need to have over 60.8 million for an interest increase larger than Cheaper by the Dozen, or 150.9 million to see an interest increase larger than Cartogriphication’s value.
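Those break-even net worths fall out of the Bank Bribery formula above. Here is a small sketch of it, ignoring compound interest like the text does. The function name is mine; the NP/day figures are the ones from the list.

```python
# Net worth at which Bank Bribery beats the other two boons.
BANK_BRIBERY_BONUS = 0.03  # extra yearly interest from the boon
DAYS_PER_YEAR = 365

def break_even_net_worth(np_per_day):
    """Bank balance whose extra daily interest equals np_per_day."""
    return np_per_day * DAYS_PER_YEAR / BANK_BRIBERY_BONUS

cheaper_by_the_dozen = 5_000  # NP/day saved on stocks
cartogriphication = 12_402    # NP/day gained in the Faerie Caverns

vs_cheaper = break_even_net_worth(cheaper_by_the_dozen)
vs_carto = break_even_net_worth(cartogriphication)

print(f"Beats Cheaper by the Dozen above {vs_cheaper / 1e6:.1f} million NP")
print(f"Beats Cartogriphication above {vs_carto / 1e6:.1f} million NP")
```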

At best, you’ll only have a battleground boon every other week, and it’s not even guaranteed you win a boon. The winning team in the battleground is the team with highest average contribution, not highest total contribution. Very often, the team with the best boons has the most freeloaders that do the bare minimum and hope to get carried to a win. Every freeloader makes it harder to win. At this point I’ve mostly stopped trying to go for boons.

There is one very narrow use case where I’d say the Battleground boons are worth it. The Reddit user u/throwawayneopoints did a detailed analysis of the Double Bubble boon. This boon will randomly let you get a 2nd use out of a single use consumable potion. People assumed this only applied to healing potions, which would be worthless, but that analysis showed it also applies to stat-boosting potions and morphing potions. The refill rate is 25%, and stat boosters can be pretty expensive, so it’s pretty easy to make Double Bubble worth it. (For example, if you use 10 Bubbling Funguses for pet training, Double Bubble will save you around 340,000 NP on average.)

The Neopian Lottery

Okay, no you should not do this one, but it’s funny so I’ll give it a quick shoutout.

The Neopian lottery is a very classic lottery where you buy tickets to contribute to a common jackpot paid to the winners. You pick six numbers from 1 to 30, then hope. What makes the Neopian lottery special is that it always pays out, splitting the jackpot evenly among winners in the case of a tie in number of matching numbers.

The jackpot has a 5k starting pot, so, very technically, this is a positive expected value lottery. A lottery these days will have around 4000-5000 tickets sold, so your expected value is like, 1 NP/ticket, which is really not worth your time. But I think it is just a perfect symbol of Neopets dailies. It’s a random slot machine, that is slightly rigged in your favor.
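The "like, 1 NP/ticket" estimate can be sketched as below. This assumes every NP spent on tickets is paid back out in the jackpot, so the only net gain shared among players is the 5k starting pot. That payout rule is my assumption for the sketch, not something confirmed here.

```python
# Rough net expected value per lottery ticket.
STARTING_POT = 5_000  # NP seeded into the jackpot

def net_ev_per_ticket(tickets_sold):
    # Assumes ticket sales are returned in full via the jackpot, so the
    # seeded pot is the only surplus, split evenly across all tickets.
    return STARTING_POT / tickets_sold

for tickets in (4_000, 5_000):
    print(f"{tickets} tickets sold: {net_ev_per_ticket(tickets):.2f} NP/ticket")
```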

Amusingly, the JellyNeo guide features this joke.

Normally this would be good advice, but in this specific instance, if you crunch the numbers, the statistics say the lotto is worth it. Have you taken a statistics class?

If You Do Everything…

| Method | Income |
| --- | --- |
| Daily Quests | 46,400 NP/day |
| Food Club (assuming 15 year old account) | 88,000 NP/day |
| Trudy's Surprise | 17,915 NP/day |
| Battledome (Dome of the Deep) | 69,046 NP/day |
| Wishing Well | 7,925 NP/day |
| Stock Market | 14,550 NP/day |
| Coconut Shy | 14,692 NP/day |
| Faerie Caverns | 1,372 NP/day |
| Total | 259,900 NP/day |

In total, we are looking at 7.797 million NP/month if you commit to doing everything. That’s a good deal better than just playing Flash games.
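If you only do some of these, the totals are easy to recompute. A minimal sketch using the estimates above and a 30 day month (the dictionary is just my way of laying it out):

```python
# Daily income estimates from this post, in NP/day.
daily_income = {
    "Daily Quests": 46_400,
    "Food Club": 88_000,
    "Trudy's Surprise": 17_915,
    "Battledome": 69_046,
    "Wishing Well": 7_925,
    "Stock Market": 14_550,
    "Coconut Shy": 14_692,
    "Faerie Caverns": 1_372,
}

total_per_day = sum(daily_income.values())
total_per_month = total_per_day * 30  # assumes a 30 day month

print(f"{total_per_day:,} NP/day")
print(f"{total_per_month:,} NP/month")
```

Delete the lines for anything you skip and rerun it to get your own number.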

Given the amount of time I’ve already spent researching NP making methods, I believe I’ve covered everything important, but if you think I missed something, feel free to comment. I’m hoping this post was a helpful resource for your Neopets needs. Or your needs to…see someone do a lot of research into things that don’t matter? I’ve watched Unraveled, I understand the appeal. Whichever need it was, I wish you luck on your wealth accumulating journey.

-

Everfree Northwest 2023

Everfree Northwest is a My Little Pony convention in the Seattle area. It’s been running for 11 years and counting.

I told myself I was done with pony conventions after BronyCon 2019. I didn’t have long-term friendships in brony fandom - what I learned long ago is that conventions are a good way to make introductions and meet people, but they don’t substitute for the work of actually keeping up and maintaining friendships. This has come up in the show itself - see Amending Fences, an episode exploring the repercussions of Twilight leaving her friends from the pilot without saying goodbye, and coming back 5 years later. It’s a common fan favorite.

Without the “I only see you at cons” dynamic that I get from ML conferences, it didn’t feel like there was much that My Little Pony cons had left to offer.

Except, I heard that with the ending of BronyCon, Everfree Northwest is the largest pony convention left. I’d never been to Everfree before, and its continued existence was not guaranteed. Surely I should check it out? And I knew friends in the Seattle area I could catch up with if I stopped by…

I went back and forth and back and forth and eventually decided, screw it, let’s go. It’s worth going at least once.

My General Reaction

Oh no, I had fun.

Oh no, Everfree Northwest actually had slightly more attendees this year than last year.

From an objective standpoint, EFNW was not that much better than previous MLP convention experiences I’ve had. But I still thought it was worth it??? If I don’t need an amazing experience to decide these cons are worth attending, and their attendance stays stable…am I going to pony conventions for all eternity? I thought I was done with these events! IS THIS MY LIFE NOW???

OH NO

“Steve, do you know the first thing I said to myself when I realized I was a brony?”

“What?”

I look him dead in the eye, and say “Shit.”

ACRacebest, describing his appearance on “Is it Weird?” on The Steve Harvey Show

“I am Cringe, but I am Free” - Socrates, probably

The classic first sight at any MLP convention is a furry in a fursuit. Every brony will tell you that bronies are not furries. Every 2023 brony will tell you that at this point there’s a pretty strong overlap.

Still, these cons are a low judgment zone. Not zero, but low. There’s definitely a vibe of “finally, I can express myself”. I don’t really try to hide my brony fandom, but I don’t go out of my way to express it either. During the con, I talked to one person who felt similarly, although he said he doesn’t wear pony shirts for personal safety reasons. He lives in a red state and isn’t sure what would happen if he was more “out” about it. My suspicion is that the majority of people would not care, there are plenty of out-and-proud bronies that live in Republican areas, have served in the military, etc. However, this is one of those things with “few idiots ruin everything” dynamics, and I can understand their choice.

These cons always have a bit of “nerd bleedover”, so to speak. One of the first events of the con was purely a meet-and-greet “make new friends” panel, for people willing to meet new people but unsure how to start conversations. (“I’m in this photo and I don’t like it.”) I ended up in many, many more Marvel and gaming discussions than expected.

Guests of Honor

If you’ve never been to a fan convention at all, one commonality is actors coming to talk about their show experience, meet fans, and sell autographs. This year, most of the people giving autographs were from G5.

A quick recap: the My Little Pony franchise is split into different “generations”. The original 1980s show is retroactively called Generation 1, and it’s counted up since then. My Little Pony: Friendship is Magic is Generation 4, or G4, and is the one that kicked off the brony fandom. Some people continued to the G5 reboot, and…it’s controversial. I watched the movie, and it struck me as “obviously flawed, but has glimmers of true quality.” I am very basic so Izzy’s my favorite. If you watched G4, she’s Pinkie Pie, except she’s a unicorn and more grounded. If you haven’t, think Luna Lovegood.

I haven’t watched anything else from G5 yet because people told me it’s not up to G4 standards. Still, it’s found its fans, and I’ve heard the quality is trending up.

Cons and fan artists have started featuring more G5 content, but the G4 mainstays still have a big chokehold. The surprise guests this year were Nicole Oliver and Tabitha St. Germain, two of the Friendship is Magic voice actors, and their lines were very obviously longer than any of the G5 voice actor lines. Some voice actors from The Owl House got invited too. Their lines were also short. People like The Owl House, I have yet to hear anything bad and there’s lots of fanbase overlap. But people just aren’t fans of The Owl House in the way they’re fans of Friendship is Magic.

In the future, it’s possible cons will continue to drift towards generic animation to help pay the bills. I think this is a bit sad, but may be a necessary tax to keep the Friendship is Magic parts going.

A Programming Break

There’s a lot of software engineers in the brony fandom. It’s not obvious why, but it leads to cool projects, I won’t complain.

At the Pony Programming Panel, there were multiple presentations on MLP projects. My favorite is easily PonyGuessr, a game about IDing My Little Pony episodes from a single still frame. I’ve tried playing this for fun, and without Google I can manage a 50% hit rate if I give myself infinite time to make a best guess. (You can access all episode titles and synopses, so even a vague guess on the season or plot is enough to narrow down the exact episode by brute force.) Of course there is a speedrun category, it was kicked off by Tridashie putting up a $2000 prize pool for fastest times. It is already terrifyingly optimized and at the con I saw people hitting 90% accuracy with 10 seconds of thinking time - which is not at all competitive with world record runs.

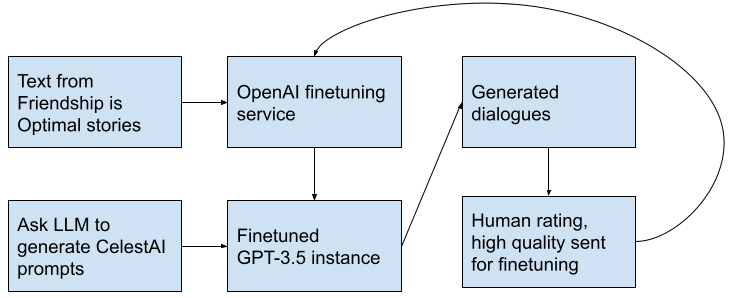

Another project was from someone trying to finetune an LLM to act as CelestAI from the Friendship is Optimal-verse. To very briefly paraphrase the presenter, “Friendship is Optimal is a fanfiction series set on Earth, where a superintelligent AGI named CelestAI is put in charge of a My Little Pony MMO. It’s told to maximize user satisfaction through ponies.” There is an entire subgenre of “people concerned about AI alignment write fanfiction” and the story unfolds in the way you’d expect of that genre. Now, this project in particular was born from someone deciding they wanted CelestAI in their life. I’m not quite sure that’s the right reaction to have after reading Friendship is Optimal, but it was interesting to see someone reinvent a synthetic data pipeline.

The output isn’t that bad, although it definitely suffers if you stray from the narrow path of talking about ponies.

I’ve talked about generative AI in My Little Pony before, and I think the reason these projects come from the fandom is that the MLP fandom has the right combination of appetite and technical experience to make these projects more than pipe dreams.

The last project presented was, well, I’d describe it as more of a therapy session. The presenter introduced themselves as “an Amazon engineer that works on things that don’t matter”, then went on a rant about 8 years of poor tech decisions they made, why they were declaring tech debt bankruptcy, and why most programming languages are awful. I learned nothing.

Twilight’s Book Nook

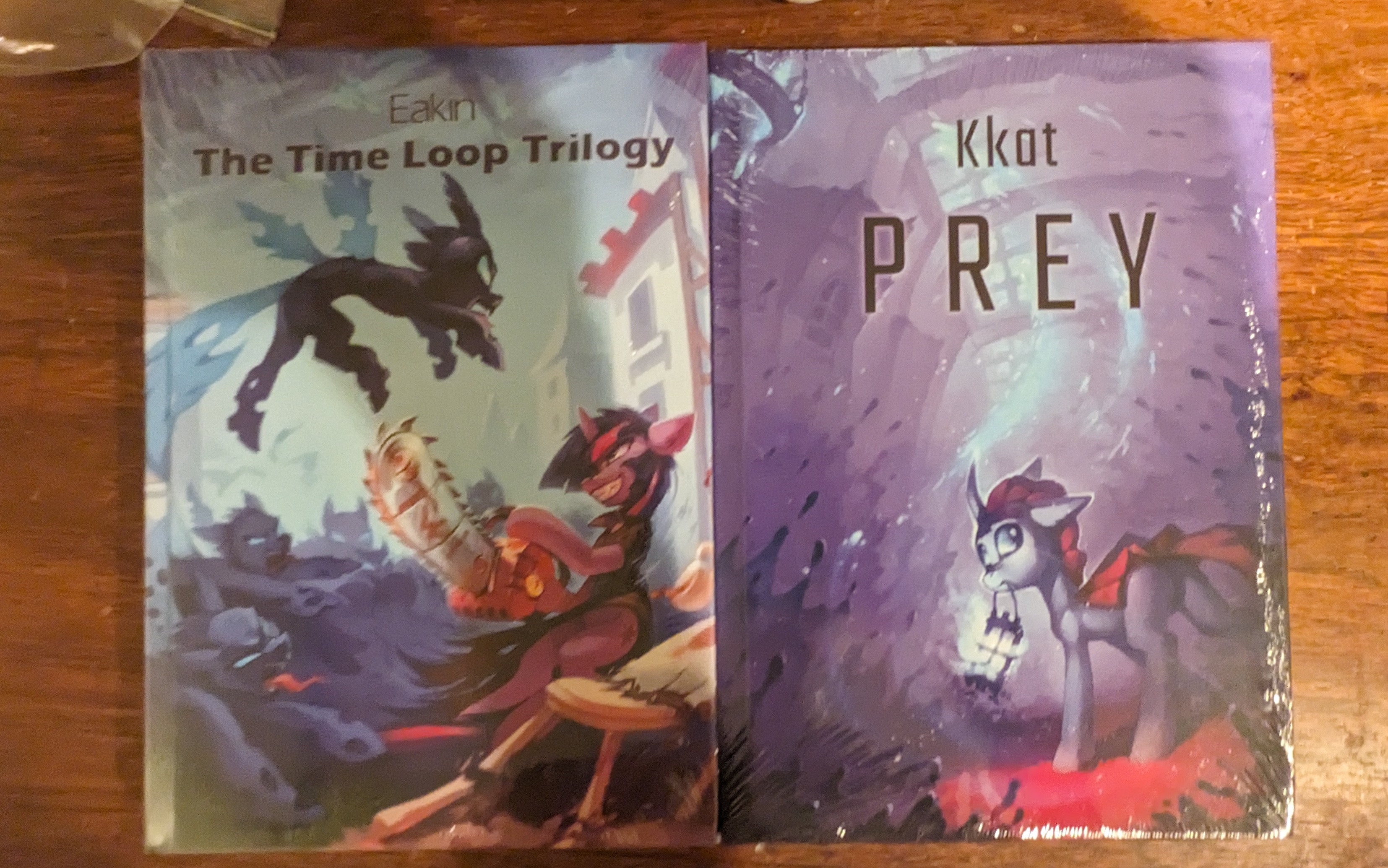

There’s a lot of My Little Pony fanfiction out there. It’s slowing down, but there are at least 3 fan projects based on publishing fanfiction at-cost. The big one I followed for a bit was Ministry of Image, named after an organization in kkat’s Fallout Equestria. Their books looked great, but their main issue was that they shipped out of Russia, which meant a large shipping cost pre-Ukraine and an “uhhhhh” post-Ukraine.

Twilight’s Book Nook is, roughly, an aggregator that gets books from the different publishing projects, and then goes to conventions to advertise and sell them. The books aren’t actually cheaper, they pass on the Russian shipping cost to con-goers, but I decided to take the chance to pick up some copies I was interested in.

I haven’t read Prey yet, and got it almost exclusively off it being written by kkat. Fallout Equestria is easily my favorite MLP fanfic and I’ll defend it as genuinely high quality outside its fanfiction trappings. “We were racing apotheosis and we were losing” still lives rent-free in my head. Hopefully Prey lives up to those expectations. The thing I appreciate about kkat is their concise style and character building, avoiding the meandering fluff that tends to plague fanfic.

“How Long Are You Out Again?”

All the pony conventions I’ve been to have raves at night. Everfree Northwest’s was called Ponystock.

Are these raves good? Ehhhhhhh. I’d say that question is missing the point. The point of these events is to give people an experience they wouldn’t get elsewhere. The average brony is not going to go to an EDM concert, and the average brony musician is not going to get a chance to perform for such a specific crowd of pony enthusiasts. I went to one of the “My Little Karaoke” sessions and it was great. Where else will you get a group singalong of “You’ll Play Your Part”?

Even then, the amount of “pony music” is always a point of contention. People tend to assume that “pony concert” means “remixes of pony songs”, or at least “overt references to My Little Pony”. These days, most of the pony musicians are people who take inspiration from the show, but basically write whatever music they want. It is very common for people to do instrumental music with no clear connection to MLP at all. People who met for different reasons but now celebrate each other.

I wrote the lyrics myself which also was a first time for me and I wanted the song to be something where anybody can imagine someone special who this song is for. So at first it doesn’t feel like a pony song and you could play it in front of a big crowd and people will love it.

But secretly I wrote this song about Twilight haha ;)

AnNy Tr3e, on “You Are My Star”

If you haven’t been following the brony music scene, this can be a bit disconcerting. Personally the brony music scene is one of the few parts of the fandom I keep up to date with, so it works out for me. I used to look down on the pony raves, because they look lame, and then over time decided that I should stop worrying about that and just go dance poorly with everyone else. To quote ANTONYMPH,

Don’t care you think it’s cringe, because it’s not your life

I was out until 2 AM every night, to the consternation of the people I was staying with.

By the way, if you haven’t seen the music video for ANTONYMPH yet, and were someone who grew up on the Internet in the 2000s or early 2010s, I highly recommend watching it. It has many very targeted references to that era of viral culture. ANTONYMPH has had a surprisingly strong impact on the fandom (I saw some ANTONYMPH music video cosplays this year), and I’d say it’s because the brony fandom has existed long enough to have nostalgia for itself and where it came from. At the vendor hall, I came across someone reselling Neopets merch, and I’d say that’s more for age overlap reasons than fandom overlap reasons.

Tonight We Gonna Party Til The Party Don’t Stop

Not everyone at a pony con is over 21, but you get enough adults of the right age together and people will want to drink.

The way Everfree Northwest handles this is with a dedicated “party floor”. A few conference suites at the hotel hosting the event are designated as party rooms. Community organizers will host late night parties out of those rooms. The idea is to control the degeneracy, by centralizing the drinking in a place with good norms. All these parties will have the same rules:

- Everyone’s ID gets checked. No exceptions.

- If you take a drink, you finish it or pour it out before leaving the room. There are plenty of minors at pony conventions and con staff wants no part in enabling underage drinking.

- Someone trained on first-aid for drunk people will be around all night.

- No one can charge for alcohol. I believe this is because of liquor license reasons, so it means all rooms run on a donation-only system.

You run into some weird people at these parties. The Bay Area pony convention often has a party run by a group of Trekkies who decorate the room in Klingon gear. I’ve been told it’s part of their conversion process for spreading the good word? And then at Everfree I ended up in the BerryTube room, which grew from a weekly My Little Pony drinking game to a bunch of people who group watch random MLP-adjacent videos, chant “HAIL HYDRATE” every so often, and insist they aren’t a cult. You know, standard stuff. They’re named after Berry Punch, the character that’s an alcoholic (or at least, as far as you can imply that in a kids’ show).

I learned all this from socializing with a member. “Yeah, we have people from all over. A few people from Europe. No Asians though-”

At which point a BerryTube regular said “OH MY GOD how can you say that to an Asian person” and then we laughed as he desperately tried to clarify the context and failed. The interrupter said I shouldn’t worry, she couldn’t be racist towards Asians because she had a black trans boyfriend who was really into K-pop. I mentioned that I was friends with a black gay guy who was into K-pop, and we briefly shared common ground over our token black LGBT Koreaboos.

There Was a MLP Card Game?

Yes! It also…has a surprising amount of complexity to it?

I went to one of the “learn to play” events since I had the free time and was curious. And, I don’t think there’s an easy summary of how the game works. It’s a little like Commander, where every deck has a double-sided “Mane Character” that always starts in play, but it can “level up” when you meet a condition. It’s a little like Marvel Snap, where there are zones that you compete over and earn points if your total power is stronger, but the zones are determined by your deck, so an aggro / control deck can pick zones appropriately. Then there’s way more rules on top of that. If I actually had someone to regularly play it with, maybe I’d buy the cards for it, but I don’t and the game’s out of print anyways. Still a bit of a trip to hear about a Princess Cadance Reanimator deck though. Those are not the words I expected to fit together.

The Charity Auction

The last event before closing ceremonies was a charity auction. This happens at other cons too. Each year the con picks a charity, people donate items to auction off, and they get sold in one big event at the end.

It’s a long-running observation that a lot of furries work in tech. A lot of bronies are in tech too. Combine disposable income with “the money goes to charity” and a desire to flex at a public auction, and you have a recipe for really high bids. $500, for an autographed photo of Tara Strong! $250, for a jar of honey! I have always tried to make these events, because you see cool things go up for bidding. I have never walked away with anything, because my price tolerance is not high enough to compete. I saw a huge bid for some pony themed license plates, and later learned the winner lived in Palo Alto and has a car restoration hobby. You can fill in the blanks from there.

I was only willing to overpay on two items. The first was a signed copy of Ponies: The Galloping, the Magic: The Gathering x My Little Pony crossover. They only printed it once, and I knew they sold on eBay for about $200. So I set a personal bidding cap of $300, and they sold for $500.

The second was a plushie and set of red envelopes with My Little Pony characters on the front, signed by a voice actor when they were promoting the MLP movie in China. They’re red envelopes, they’re cheap! Sure, there’s an autograph premium, but I figured that I could irresponsibly go up to $100 and have a shot.

They ended up going for $350. Ah well. There are ways to signal allegiance besides money.

Speaking of the Chinese brony scene, it only lightly interacts with the English scene and I don’t know much about it. The one native Chinese brony I’ve met said it trends younger, and the scene can face visa troubles when going to the US. I also have a link to a MLP bilibili slang spreadsheet that’s very excellent. Nicknaming Starlight Glimmer “Communist Secretary” hits different when someone from China does it.

FOMO

Unfortunately, I ended up catching COVID from the con.

Now, ahead of time, I had considered the COVID risk and considered it within my risk tolerance. Adaptations of recent strains to be more mild, full vaccination suite with bivalent booster, etc. Symptom-wise, that all played out fine, I essentially just had a mild fever and cough. The part I did not account for was that I’d have to cancel all my planned social meetups while staying-in-place. That sucked. The COVID risk was low but the FOMO risk was high.

I definitely still would have gone to the con if I had given it more consideration, but I would have been a bit more diligent about masking protocols. Ah well. That dampened things a bit, but I will (probably) still be back next year.

-

Eight Years Later

Sorta Insightful turns eight years old today!

I am writing this while traveling and sick with COVID, which is the clearest sign that I am in this for the long haul. I don’t remember where it’s from, but long ago I remember reading a novelist’s guide for how to write a novel. It had a bunch of typical advice, but ended with “sometimes the way to write a novel is to just go write a f***ing novel.” That’s where I’m at with this blog.

I have a bit of an ADHD relationship with writing, where I will go a long time without writing anything, then stay up until 5 AM revising a post. Getting started is hard but sustaining effort is easy.

Highlights

I’m done with writing MIT Mystery Hunt! Freedom!

The post about it was pretty rewarding to write, although “post” may be the wrong word. Credit to CJ for noting that the post was long enough to pass NaNoWriMo.

Did it need to be that long? Eh, probably not, but I kept having ideas I wanted to put in. I also knew I was not in a mood to revise it to be shorter. The revisions I did were focused on grammar, word choice, and overall flow, rather than deciding if an idea should be cut. (Feel free to draw a comparison to the Hunt itself if you want.)

To be honest, I do not expect to write a post of that length again. I started working on that post right after Mystery Hunt 2023 finished, and hardcore focused on getting it out ASAP. “As soon as possible” turned out to be 3 months, ending right when planning for ABCDE:FG ramped up. The end result was that I was exerting “write Mystery Hunt” levels of effort (10-20 hrs/week) for 1.5 years straight. That was just too much, and I burned out on doing anything with my free time besides vegetating.

My burnout lined up almost exactly with getting a copy of The Legend of Zelda: Tears of the Kingdom. Let’s say my escapism into TotK was especially therapeutic. I’m done with the game now, and am still adjusting to not having a “default” action taking up mindshare. “I should write a puzzle”, “I should write about writing puzzles”, or “I should go find more Koroks” have been like, the only three thoughts I’ve had outside of work since last year. Gotta have more thoughts than those!

Statistics

Posts

I wrote 6 posts this year, down from 7 last year. In my defense, this is a bit misleading, especially if I pull up the time stats.

Time Spent

I spent 189 hours, 45 minutes writing for my blog this year, around 1.9x as long as last year.

Around 170 hours of that was on the Mystery Hunt retrospective post.

View Counts

These are view counts from August 18, 2022 to today.

| Views | Post |
| --- | --- |
| 259 | 2022-08-18-seven-years.markdown |
| 372 | 2022-10-01-generative-modeling.markdown |
| 333 | 2023-01-20-mh-2023-prelude.markdown |
| 1929 | 2023-04-21-mh-2023.markdown |
| 114 | 2023-05-09-bootes-2023.markdown |
| 153 | 2023-07-19-ml-hurry.markdown |

Posts in Limbo

Post about Dominion Online:

Odds of writing this year: 5%

Odds of writing eventually: 25%

My priorities are moving away from Dominion. This may be the first year I skip the yearly championship, I’m not too interested in grinding my skill level back up. Still, I can’t let go of writing this eventually.

Post about Dustforce:

Odds of writing this year: 20%

Odds of writing eventually: 60%

I still think Dustforce is one of the best platformers of all time. I’ve been playing a lot more Celeste recently thanks to Strawberry Jam, but Dustforce still does some things that no one else has replicated in a satisfying way.

Post about puzzlehunting 201:

Odds of writing this year: 50%

Odds of writing eventually: 90%

This is a post I’ve been considering for a while. There are introduction to puzzlehunt posts, a so-called “puzzlehunt 101”, but there is no “puzzlehunting 201” for people familiar with the basics and interested in solving faster. I was going to write this last year, embedding puzzle content in it for Mystery Hunt, but didn’t have a solid puzzle idea and got too busy. Now I’m less busy.

Post about AI timelines:

Odds of writing this year: 90%

Odds of writing eventually: 99%

I’m not planning a big update to the last timelines post. I just think it’s time to review what I wrote last time, given that it’s been 3 years. (And selfishly, because I think I called a lot of things correctly and want to brag about that.)